Advanced on page SEO made simple.

Powered by POP Rank Engine™

Includes AI Writer

7-day refund guarantee

Ever wondered why traditional keyword tools often miss the mark? That’s because they rely on exact match or surface-level keyword frequency, ignoring the semantic meaning and search intent behind user queries.

Enter Natural Language Processing (NLP), the technology that powers semantic SEO, voice assistants, and intelligent search engines like Google. NLP-driven keyword research doesn’t just find matching words; it understands language like a human would.

Here’s what makes NLP-based keyword research a game-changer:

- Contextual understanding using models like BERT

- Recognition of semantic similarity between terms

- Smarter suggestions through vector embeddings

Whether you're optimizing content for voice search, building a topical map, or doing competitive analysis, mastering NLP keyword research will elevate your SEO strategy into the future.

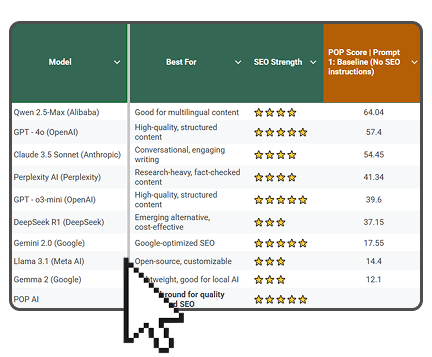

Which is the best LLM for SEO content?

Get the full rankings & analysis from our study of the 10 best LLM for SEO Content Writing in 2025 FREE!

- Get the complete Gsheet report from our study

- Includes ChatGPT, Gemini, DeepSeek, Claude, Perplexity, Llama & more

- Includes ratings for all on-page SEO factors

- See how the LLM you use stacks up

Foundations of NLP in Keyword Research

So let’s get this straight: NLP isn’t just some nerdy buzzword tossed around by engineers. It’s the reason your phone understands “Hey Google, where’s the nearest coffee shop?” and why modern keyword research goes far beyond just finding what people are typing.

A. What is NLP (Natural Language Processing)?

Think of Natural Language Processing as the bridge between human language and machines. It allows software to analyze, understand, and even generate human language, whether it’s spoken or typed.

In SEO, this means NLP can:

- Understand what a person meant, not just what they wrote.

- Detect entities like names, places, and topics in a block of text.

- Recognize search intent: Is someone asking a question? Looking to buy? Just browsing?

That’s a whole new level compared to the “stuff-keywords-and-pray” method of the early 2000s.

B. How NLP Understands Queries

Traditional tools read a phrase like:

“How do I rank better on Google?”

And treat it as: how, do, I, rank, better, on, Google.

But NLP tools? They dive deeper. They:

- Strip out stop words like “how” and “do”.

- Identify “rank better” as a semantic phrase.

- Understand that “Google” is not just a word, but a search engine entity.

- Analyze the search intent behind the phrase (informational).

Modern NLP uses models like BERT (Bidirectional Encoder Representations from Transformers) to handle this. These models look at context, not just keywords, to extract semantic meaning.

C. From Strings to Semantic Meaning

Here’s a simple breakdown:

Thanks to semantic vector embeddings, tools can now measure semantic similarity between phrases even if they use completely different words. “Best SEO tools” and “Top search optimization software”? Same intent. NLP gets it.

So if you’re still relying on tools that just count keywords… It’s time for an upgrade. With NLP, we’re not just playing the search engine game, we’re understanding the rules that drive it. And that’s how you start winning. To apply these concepts in your SEO workflow, you can use tools like PageOptimizer Pro’s Content Editor to align your content with semantic SEO best practices.

Core NLP Techniques Used in Keyword Extraction

Ever tried shouting keywords at Google and hoping for traffic? Yeah… that doesn’t work anymore. Today, keyword extraction is all about using smart NLP techniques that understand your content the way a human would.

So let’s break down the essential techniques behind NLP-powered keyword research, the tools and tricks that help you mine relevant, contextual, and high-converting keywords without the guesswork.

A. Tokenization and Normalization

Before machines can make sense of text, they have to break it down into digestible chunks.

- Tokenization splits sentences into tokens, basically, individual words or phrases.

- Normalization cleans things up by lowercasing text, removing punctuation, and even converting accented characters (like é to e).

Example:

“Natural Language Processing (NLP) is awesome!”

Becomes → natural, language, processing, nlp, awesome

This is the first step in most keyword extraction algorithms, cleaning the data so machines can make intelligent decisions.

B. Stemming vs Lemmatization

These two sound fancy, but they’re just different ways of finding the root form of a word.

Stemming

- Chops off suffixes.

- Not always pretty, but fast.

- Example: running, runner, runs → run

Lemmatization

- Smarter and cleaner.

- Uses context and grammar to find the base word.

Better becomes good, feet become feet.

Pro tip: Most modern NLP tools (like spaCy) use lemmatization because it provides more accurate results for semantic search and content clustering.

C. Named Entity Recognition (NER)

NER is like a highlighter that identifies proper nouns and specific entities in a sentence.

Here’s how it works:

Why does this matter?

Because entity extraction helps algorithms understand which terms carry real semantic weight. It’s a core part of search engine indexing and voice search optimization.

D. POS Tagging and Word Segmentation

POS stands for Part-of-Speech tagging. It’s like labeling each word with its grammatical role: noun, verb, adjective, etc.

Why it matters:

- Helps filter out fluff (like articles and conjunctions)

- Prioritizes nouns, adjectives, and verbs, the juicy parts for keyword targeting

Example:

“The best SEO tool for beginners”

POS tags: best (ADJ), SEO (NOUN), tool (NOUN), beginners (NOUN)

Meanwhile, word segmentation tackles languages where spaces aren’t used (like Japanese or Chinese). But even in English, it helps break down compound words like “icebox” or “e-commerce”.

E. Semantic Vector Embeddings (Word2Vec, BERT, etc.)

Here’s where things get fun and seriously smart.

Vector embeddings are a way to map words in a multi-dimensional space so that their meanings can be compared mathematically.

Imagine this:

- "King" – "man" + "woman" = "Queen"

- "Buy sneakers," ≈ "purchase athletic shoes"

This magic happens thanks to models like:

- Word2Vec – captures word associations in context

- GloVe – global word-word co-occurrence

- BERT is a model that understands sentence context, not just word proximity

Why it matters for keyword research:

- It groups similar keywords automatically

- Helps you discover semantically related phrases you never thought of

- Fuel's intent-based search and topical clustering

If you’re using tools like KeyBERT or OpenAI’s embeddings, you’re already leveraging this tech.

Here’s How All These Techniques Fit Together

All of these NLP techniques work together to decode your content and extract powerful keywords that match how people think, search, and speak.

Instead of chasing keyword tools that spit out the same generic terms, you’re now equipped to mine intent-rich, contextual, and search engine-friendly terms that Google loves.

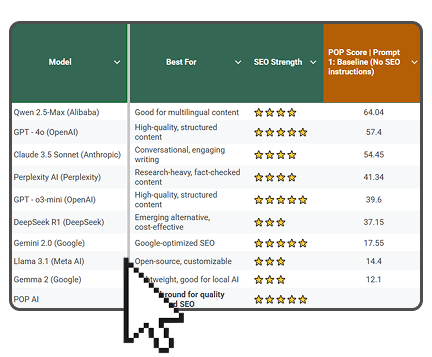

Which is the best LLM for SEO content?

Get the full rankings & analysis from our study of the 10 best LLM for SEO Content Writing in 2025 FREE!

- Get the complete Gsheet report from our study

- Includes ChatGPT, Gemini, DeepSeek, Claude, Perplexity, Llama & more

- Includes ratings for all on-page SEO factors

- See how the LLM you use stacks up

Popular NLP-Based Keyword Research Algorithms

Alright, so you’ve learned about the core NLP techniques, now it’s time to put them into action. But here’s the real question: How do you extract keywords using NLP? This is where keyword extraction algorithms come in.

These algorithms use all the semantic intelligence, vector embeddings, and linguistic tricks we covered earlier to find the most relevant, context-rich, and high-intent keywords from any block of text.

Let’s walk through the most popular NLP keyword extraction methods, each with its own strengths and ideal use cases.

1. TextRank: The OG Graph-Based Genius

If you’re familiar with Google’s PageRank, TextRank is its nerdy cousin for text. It builds a graph of words and phrases from your content and scores them based on how frequently and closely they appear together.

How it works:

- Builds a co-occurrence graph

- Applies PageRank-style scoring

- Extracts top-scoring phrases as keywords

Great for:

- Articles and blog posts

- Content summarization

- Fast, unsupervised results

Tools like spaCy + PyTextRank make using it a breeze even for beginners.

2. RAKE (Rapid Automatic Keyword Extraction)

RAKE is like the efficient coworker who gets straight to the point. It works by removing stop words, then scoring keyword phrases based on frequency and word co-occurrence.

What makes it special:

- Easy to implement with just Python + NLTK

- Scores multi-word phrases, not just single tokens

- Doesn't require external data or training

Great for:

- Product descriptions

- Niche SEO pages

- Skimming informational keywords

Example: “natural language processing algorithm” could be a high-score phrase.

3. YAKE (Yet Another Keyword Extractor)

YAKE is like RAKE’s cooler, stats-savvy sibling. It goes beyond frequency and analyzes things like position, case sensitivity, and contextual relevance to identify keywords.

Unique traits:

- Purely statistical approach

- Works well on short or noisy texts

- Handles multi-language content with ease

Great for:

- International SEO

- Short-form content or metadata

- When you don’t have a large dataset

4. KeyBERT: Powered by Transformers

If you want cutting-edge, this is it. KeyBERT uses BERT embeddings to find keywords that are contextually similar to your entire document. It's like having a mini semantic search engine just for your content.

Why it's a game changer:

- Uses cosine similarity with sentence embeddings

- Finds semantically rich phrases, not just surface-level ones

- Ideal for intent-based SEO and topic modeling

Great for:

- Long-form articles

- Landing page optimization

- Advanced content strategy

Powered by transformer models like distilbert-base-nli-mean-tokens.

5. Hybrid or Custom Models

Sometimes, you need to mix and match. Many teams combine these models, layering TextRank on top of BERT embeddings or adding TF-IDF filtering for extra precision.

You can also build custom NLP pipelines using:

- spaCy for tokenization and POS tagging

- OpenCalais for entity recognition

- KeyBERT for final selection

Great for:

- Agencies

- SaaS platforms

- Anyone needing scalable keyword extraction

Tools and APIs That Leverage NLP for Keyword Discovery

Let’s be honest, NLP keyword research sounds cool, but you’re not going to build everything from scratch using raw code and academic papers. You want tools that get the job done, fast and smart. The good news? You’ve got options. From plug-and-play APIs to open-source Python NLP libraries, there’s a tool for every use case and budget.

Here’s a breakdown of the best ones out there.

1. Wordtracker + OpenCalais

Wordtracker’s Inspect tool integrates with OpenCalais, a powerful NLP API originally developed by ClearForest and now maintained by Thomson Reuters.

What it does:

- Extracts semantic keywords and entities

- Understands context, not just string matches

- Outputs structured JSON data for easy analysis.

Perfect for:

- Competitor research

- Content audits

- Surfacing intent-rich terms that other tools miss

2. KeyBERT + Transformers

If you’re ready to get technical (but not too technical), KeyBERT is your best friend. It uses BERT embeddings to pull out the most contextually similar phrases from your content.

Why it’s amazing:

- Works straight out of the box with a few lines of Python

- Uses cosine similarity to rank keywords

- Ideal for long-form content and topic modeling

Bonus: You can customize the model using HuggingFace Transformers, like distilbert-base-nli-mean-tokens.

3. spaCy + PyTextRank

Want more control? Build your own NLP pipeline using spaCy with the PyTextRank plugin.

What you get:

- Full access to POS tagging, tokenization, and NER

- Graph-based keyword extraction with TextRank

- Ability to fine-tune based on your industry or language

Best for:

- Developers and technical SEOs

- Building scalable content workflows

- Custom keyword extraction logic

4. Enterprise Tools: Algolia, IBM Watson NLP

If you’re working on a large project or SaaS platform, consider enterprise-grade NLP solutions like:

- Algolia: Built-in semantic search with multilingual processing, typo tolerance, and ranking algorithms

- IBM Watson NLP: Robust entity extraction, sentiment analysis, and advanced query understanding

These tools offer more than just keyword suggestions; they help power entire search and discovery platforms.

Workflow: How to Perform NLP Keyword Research Step-by-Step

So you’ve got the tools, the algorithms, and a growing love for semantic search. But now comes the real magic: putting it all together into a repeatable workflow. Whether you're optimizing a blog post or planning a massive content strategy, this NLP-powered keyword workflow keeps things sharp, efficient, and Google-approved.

Let’s walk through the process step by step

Step 1: Input Your Seed Content

Start with a piece of text, this could be a webpage, competitor blog, or even your long-form article. Tools like Wordtracker, KeyBERT, or a basic Python script will analyze this content for keyword extraction.

Use:

- Existing articles

- Competitor pages

- Support docs, case studies, or transcripts

Step 2: Preprocess the Text

Before diving in, run basic text preprocessing. This includes:

- Tokenization: Break the text into words and phrases

- Normalization: Lowercasing, removing punctuation, cleaning accents

- Stop word removal: Get rid of words like “the,” “and,” or “is”

Tools like spaCy or NLTK make this painless.

Step 3: Extract Semantic Keywords

Now the fun part. Choose your algorithm:

- Use RAKE or YAKE for fast statistical extraction

- Try TextRank for graph-based summarization.

- Go with KeyBERT for contextual phrase detection.

Each method will give you a ranked list of relevant terms, often with scores for importance or semantic similarity.

Step 4: Analyze Relevance & Intent

Not all keywords are created equal. Group your results into search intents:

This step is crucial for content mapping and SERP targeting.

Step 5: Refine with Vector Embeddings & TF-IDF

Want that extra edge? Combine TF-IDF scoring with vector similarity models like BERT or Word2Vec. This helps:

- Weed out generic terms

- Boost context-rich, intent-aligned phrases.

- Build topical clusters for internal linking.

Final Thoughts & Future Trends

Let’s face it, keyword research isn’t just about stuffing in high-volume terms anymore. It's about understanding search intent, semantic relationships, and how users think and speak. And thanks to Natural Language Processing (NLP), we’re finally doing that at scale.

Shortly, expect even more advanced tools built on large language models (LLMs) like GPT, BERT, and Gemini to dominate the keyword game. These models don’t just find keywords, they find concepts, themes, and user intent behind every query.

What’s coming next?

- Multilingual semantic search across global markets

- Zero-click search optimization via entity-rich snippets

- Voice-first SEO that leverages conversational NLP

- Topic clustering driven by real-time vector embeddings

Your job? Stay ahead of the curve by embracing tools that understand meaning, not just match words.

In short, NLP has taken keyword research from a mechanical checklist to a smart, intuitive process. If you’re not leveraging it, you're not just behind on SEO, you’re behind on how the internet thinks. So go deeper. Think context. Think meaning. Think NLP.Foundations of NLP in Keyword Research